GPGPU: Is a Supercomputer Hiding in Your PC?

By Chris Seitz.Date: Jul 1, 2005.

Article Description

Growth of the GPU

Were you aware that the most powerful chip in an average or better PC is not the CPU, but the GPU (graphics processing unit)? More than likely, you had an inkling that some serious technology was driving your graphics card, and over the past couple years saw the accoutrements of raw power appear: larger fans and heatsinks, and even supplementary power connectors. If you're on the bleeding edge, you may have painstakingly built a benchmark-crushing PC with a water-cooling system. The growing rage is a single PC with multiple GPUs in a scalable link interface (SLI) configuration.

Nowadays, high-performance GPUs have become more rational, with quiet thermal solutions and reasonable power requirements. But the ever-advancing video game industry entices consumers with a consistent and growing hunger for higher performance. This mass market drives a tremendous technology arms race in the GPU industry, with consumers being the overall winners. In turn, low prices for high-performance GPUs provide a great opportunity for software developers and their customers, letting them capitalize on otherwise idle transistors for their computational needs.

Perhaps management won't pay for you to use all that GPU performance during the workday, and the only exercise your powerful, computationally starved GPU gets is your lunchtime and after-work gaming sessions. That simply isn't fair to all those transistors.

So how do you convince your boss that you really need to buy the latest GPU? In fact, the argument really isn't difficult—provided that your programmers are willing and able to recast your problems to fit the massively parallel architecture of the GPU. If you can stay on the computational superhighway of the GPU, you'll be able to unleash tremendous gigaflops. Naturally, today's graphically intense applications do exactly that, using one of the two primary APIs for programming these chips: the cross-platform OpenGL API, or Microsoft's Direct3D component of the DirectX API. For example, leading games have inner loops with core algorithms that run "shaders" across an array of pixels. A shader takes in a variety of inputs—geometry and light positions, texture maps, bump maps, shadow maps, material properties, and other data—for special effects such as fog or glow. It then computes a resulting color (and possibly other data, such as transparency and depth). All this action happens for each pixel on the screen, at 60 or more frames per second—a massive amount of computation.

At its core, this processing is similar to how most computer-animated and special effects–rich movies, such as Shrek, are produced. A C-like high-level programming language is used to write shaders, and then a commercial or in-house rendering program chugs away, computing scenes on the film studio's render farm. Often comprising thousands of CPUs, these render farms handle the enormous amounts of geometry and texture data required to hit the increasing quality threshold demanded by discerning audiences of CG feature films. This rendering process is commonly done overnight, and reviews of the "dailies" (those scenes rendered overnight) are done the following morning.

GPUs go through a simplified version of this process 60 or more times per second, and each year the gap between real-time and offline rendering shrinks. In fact, many recent GPU technology demonstrations show real-time renderings of content that was generated offline just a few years ago.

Embarrassingly Parallel

Computer graphics have often been called "embarrassingly parallel." Large batches of vertices need to be transformed with identical or similar matrices. Large blocks of pixels need to be rendered with identical or similar shaders. Large images need to be blended to create a final image. Hence, the natural evolution of the GPU architecture has been toward a multithreaded single instruction multiple data (SIMD) machine with parallel execution units in three areas: the vertex, pixel, and raster portions of the chip. The architecture has also evolved to handle massive amounts of data on the GPU. Today, onboard memory is topping out at half a gigabyte, with additional fast access to main memory through PCI Express (theoretically at 4 GB/s in each direction). Internal memory bandwidth—between the GPU and its own local video memory—is around 40 GB/s.

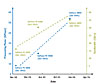

As you can imagine, the GPU is particularly well suited to definite arenas. Certain problem sets lend themselves to the current GPU architecture, and we can confidently assume that future GPUs will become more flexible and handle previously unreasonable problem sets. In other words, the GPU is often the best tool for the job, and, due to rapid increases in brute computational power (see the trend line in Figure 1), is poised to grab more and more of the high-performance computing (HPC) market. Those experiencing the greatest performance increases have problems in which the computational or arithmetic intensity of the problem is high, meaning that many math operations occur for each piece of data that's read or written. This is because most of the transistors in GPUs are devoted to computational resources, and because memory accesses are always significantly more expensive in comparison to computation. For the same reasons, the GPU excels when off-chip communication is reduced or eliminated.

Figure 1 Rapidly increasing GPU capabilities.

Over the past five years, the GPU has rapidly evolved into a programmable "stream processor." Conceptually, all data it processes can be considered a stream—an ordered set of data of the same datatype. Streams can range from simple arrays of integers to complex arrays of 4 × 4 32-bit floating-point matrices, or even arrays of arbitrary user-defined structures. The streams are fed through a kernel that applies the same function to each element of the entire stream. Kernels can operate on the data in multiple ways: [1]

- Transformation. Remapping.

- Expansion. Creating multiple outputs per input element.

- Reduction. Creating one output from multiple inputs.

- Filter. Outputting a subset of the input elements.

One particular restriction to flexibility is key to enabling parallel execution and high-performance computation: "[K]ernel outputs are functions only of their kernel inputs, and within a kernel." [2]

GPGPU Defined

An active community has evolved that focuses on GPGPU applications. GPGPU stands for general-purpose computation on a graphics processing unit. The web site http://www.gpgpu.org is a hub for this area, and does a fantastic job of cataloging the current and historical use of GPUs for general-purpose computation. Lately, a few seminars have covered this topic (GP2 and SIGGRAPH 2004, for example), and a full-day course at SIGGRAPH 2005 will focus on GPGPU.

If you're interested in diving right into GPGPU, read the recent book GPU Gems 2: Programming Techniques for High-Performance Graphics and General-Purpose Computation (Addison-Wesley, 2005, ISBN 0321335597), by Matt Pharr and Randima Fernando. The book focuses on the GPGPU space, devoting 14 chapters to this topic, covering everything from fundamentals to optimizations to real-world case studies. Starting by tackling the trends and underlying forces that drive streaming processing forward, plus detailing the low-level design of a modern GPU (excerpted here), the authors give readers a strong base in GPU fundamentals. Building upon that base, readers learn more thoroughly the detailed differences between the CPU and GPU, strengths and weaknesses of each, and how to most efficiently map computational concepts to the various portions of the GPU. Efficiently harnessing the GPU for computation is then discussed, with focus on parallel data structures, flow-control idioms, and program optimization.

GPGPU in the Real World

Most of GPU Gems 2 is structured in a "gem"-style fashion, detailing best practices and cutting-edge uses of the GPU—particularly advanced graphics techniques. But the book also provides numerous examples of how GPUs have excelled at GPGPU tasks.

A particularly interesting chapter (and perhaps profitable to certain readers) discusses computational finance and the pricing of options. GPUs are demonstrated to be far superior to CPUs for the task of efficiently determining the fair value of options (in this case, tens of thousands of options simultaneously). This task concerns Wall Street institutions intimately, in part due to a need to hold sufficiently large cash reserves to cover market fluctuations. Because this requirement necessitates borrowing large sums at overnight interest rates, accurate, timely calculations lead to less conservative, more accurate borrowing, and therefore less interest paid. This example from the world of financial engineering shows how GPUs can be extremely useful for decidedly non-graphical problems. Other examples touch on computational biology (protein structure prediction), partial differential equations (such as those used in physics simulation), sorting algorithms, fluid flow (the Lattice Boltzmann Method), and fast Fourier Transforms (FFTs) on the GPU.

The International Technology Roadmap for Semiconductors (ITRS) compiles the industry trends and has forecast a 71% increase in capability of chips year after year. [3] That incredible growth rate, compounded with the ability to build configurations with multiple GPUs operating in parallel via SLI, makes it likely that the GPU will continue to subsume functionality (previously belonging to CPUs) for increasingly mainstream computation. Chances are that opportunities exist in your own business for GPGPU computation. It's well worth exploring this field and jumping on the train soon, because it's only getting faster as GPUs increase in performance, functionality, and programmability.

References

[1] GPU Gems 2, Chapter 29.

[2] GPU Gems 2, p. 465.

[3] GPU Gems 2, p. 458.

Courtesy: Informit

0 Comments:

Post a Comment

<< Home